"

1453R wrote:

C'mon, folks. C'mon. If you "can't even trust your eyes anymore(!!)", then I guess you're going to have to trust your brains instead, and think about the things you're being told. Maybe even - brace yourselves, this is gonna be heavy - do some fact checking or cross research before opening your yapholes on the latest scandal piece from Politically Driven "News" Network X.

My entire point is that it's obvious that this is never going to happen, that's what makes this so worrisome in the first place. The average human is dumb and lazy. Or if you want to put it in a positive way, busy with other stuff (like surviving) so he can't allocate cognitive resources to fact checking, cross researching and critical thinking about every little thing. Which makes him very vulnerable to manipulation when we have the technological means to fake everything. It's easy to say "just do some fact checking bro" but even nowadays that shit is very time consuming and takes quite a bit of mental effort, especially with complicated topics. Good luck with that in the future when in theory everything can be fake.

GGG banning all political discussion shortly after getting acquired by China is a weird coincidence.


Posted by Xavderion#3432 on Dec 21, 2018, 12:22:21 PM


"

Xavderion wrote:

My entire point is that it's obvious that this is never going to happen, that's what makes this so worrisome in the first place. The average human is dumb and lazy. Or if you want to put it in a positive way, busy with other stuff (like surviving) so he can't allocate cognitive resources to fact checking, cross researching and critical thinking about every little thing. Which makes him very vulnerable to manipulation when we have the technological means to fake everything. It's easy to say "just do some fact checking bro" but even nowadays that shit is very time consuming and takes quite a bit of mental effort, especially with complicated topics. Good luck with that in the future when in theory everything can be fake.

The solution is to halt all technological advancement right now, and keep the species perpetually on the edge of singularity without ever inventing anything new ever again.

Or, alternatively? People can stop getting butthurt over absolutely everything and quit giving the scandalmongers who'd abuse technology like this a reason to do so.

Since neither of those things are going to happen because people are clever and also people are a bunch of stupid flaming assholes, then I guess we'll get to see what happens when propagandists get to use photorealistic graphics instead of political cartoons, and how many years it takes for people to realize that they can't trust their news feeds anymore and thusly stop caring.

She/Her


Posted by 1453R#7804 on Dec 21, 2018, 12:34:20 PM


"

1453R wrote:

The solution is to halt all technological advancement right now, and keep the species perpetually on the edge of singularity without ever inventing anything new ever again.

Or, alternatively? People can stop getting butthurt over absolutely everything and quit giving the scandalmongers who'd abuse technology like this a reason to do so.

Since neither of those things are going to happen because people are clever and also people are a bunch of stupid flaming assholes, then I guess we'll get to see what happens when propagandists get to use photorealistic graphics instead of political cartoons, and how many years it takes for people to realize that they can't trust their news feeds anymore and thusly stop caring.

There's no solution to this problem, that's what makes it even more worrisome :D

All we can do is talk about it. It's better than not talking about it.

Btw, an apathetic populace is the best thing that can happen to political leaders. So yeah, people not caring anymore isn't exactly a good thing either.



Posted by Xavderion#3432 on Dec 21, 2018, 12:46:34 PM


what's worrisome to me is that video evidence will no longer be trustworthy

Build of the week #9 - Breaking your face with style http://www.youtube.com/watch?v=v_EcQDOUN9Y

IGN: Poltun


Posted by faerwin#5850 on Dec 21, 2018, 2:11:35 PM (Alpha Member)


"

faerwin wrote:

what's worrisome to me is that video evidence will no longer be trustworthy

It's already not trustable. People who think that video is infallible proof of whatever is being video'd are idiots. Especially when talking about low-res security-type feeds or other shit where fine detail is lost.

Even without The Horrible Dangers of Evil A.I., there are things called actors. They can look frighteningly like someone you'd like smeared if you find the right one and release grainy enough video. There's this thing called video editing that we've been doing forever. And there's also this thing called digital forensics that's supposed to let smart people disprove doctored footage, and it'll get better as doctored footage does.

If you're complaining that you can no longer trust your favorite YouTube conspiracists or your biased news agency of choice to put out video proof of the perfidy of mankind? Good. You should never have trusted it anyways. Skepticism is love. Skepticism is life. Especially these days.



Posted by 1453R#7804 on Dec 21, 2018, 2:37:59 PM


AI Drones are scarier to me than this fake people thing.

Seems I'm not alone in noticing the cats

"

Cats of Cthulhu! Sure, some look okay at a glance, but others are writhing, misshapen cat-esque horrors. At first it seems odd the AI could nail down human features, hair, freckles, wrinkles, and even eyeglasses so astutely, and then the very same AI could turn around and generate a cat that looks like it's passed through a meat grinder. But then I suppose faces are one thing and entire bodies are something else. If Nvidia's AI tried to generate an entire naked human (and I really hope it does because I'd like to see that) the results might not be any better.

I also like that a few of the cat images produced even have a bit of AI-generated meme text built in. Point a computer at a cat library on the internet, you're gonna get some memes in there, I guess. Maybe we shouldn't be teaching our AI to learn unsupervised. Keep it off Reddit, at least, until it's a bit older.

My favorite AI generated cat is The One I'm calling Void Cat:

I think It's cute, don't you? I'm hoping to make It the new mascot of PC Gamer. Yes, if you gaze upon Void Cat too long It will devour your soul and leave you a barren, withered husk, but Void Cat is still adorable in Its own way. I'm not sure if that's Its tail or perhaps Its foot (one of thirteen, I'm guessing) covering Its mouth (?) as It deliberates whether or not to spare you from the unknowable, unending abyss.

I'm guessing I will find out soon. We all will find out soon. It won't be long, now. It won't be long.

PC Gamer

"

We may already feel cozy about artificial intelligence making ordinary decisions for us in our daily lives. From product and movie recommendations on Netflix and Amazon to friend suggestions on Facebook, tailored advertisements on Google search result pages and autocorrections in virtually every app we use, artificial intelligence has already become as ubiquitous as electricity or running water.

But what about profound, life-changing decisions, such as those in the judicial system, where a person can be sentenced based on algorithms he isn’t even allowed to see?

A few months ago, when Chief Justice John G. Roberts Jr. visited the Rensselaer Polytechnic Institute in upstate New York, Shirley Ann Jackson, president of the college, asked him “when smart machines, driven with artificial intelligences, will assist with courtroom fact-finding or, more controversially even, judicial decision-making?”

The chief justice’s answer was truly startling. “It’s a day that’s here,” he said, “and it’s putting a significant strain on how the judiciary goes about doing things.”

In the well-publicized case Loomis v. Wisconsin, where the sentence was partly based on a secret algorithm, the defendant argued without success that the ruling was unconstitutional because neither he nor the judge was allowed to inspect the inner workings of the computer program.

Northpointe Inc., the company behind Compas, the assessment software that deemed Mr. Loomis to have an above-average risk factor, was not ready to disclose its algorithms, and said earlier last year, “The key to our product is the algorithms, and they’re proprietary.”

“We’ve created them, and we don’t release them because it’s certainly a core piece of our business,” one of its executives added.

Computationally calculated risk assessments are increasingly common in U.S. courtrooms and are handed to judicial decision makers at every stage of the process. These so-called risk factors help judges decide bond amounts, how harsh a sentence should be, or even whether the defendant can be set free. In Arizona, Colorado, Delaware, Kentucky, Louisiana, Oklahoma, Virginia, Washington and Wisconsin, the results of such assessments are given to judges during criminal sentencing.

Another piece:

"

AI does not need to define us or replace us; we have the opportunity to define AI in the context of corporate communications, which includes both external and internal communications. AI technology itself is neither good nor bad. In fact, it is a reflection of the heart of the person using it or unleashing it through automation. Just as the internet has done for more than two decades, it can reveal as much moral clarity as it can moral depravity. Someone can use the internet to spread false or misleading information just as much as to post truthful information.

However, at a higher level, AI’s real value is in enhancing, supporting and amplifying human truth, human experience and, ultimately, human freedom. And I believe that organizations will increasingly become the purveyors of these things in the future. Ironically, AI can help enhance what it means for us to be human.

Anthony Petrucci is Senior Director of Corporate Communications & Public Affairs at HID Global, a technology company in Austin, Texas.

That last one, just ... yeah. Purveyors of freedom?


Posted by erdelyii#5604 on Dec 21, 2018, 6:11:35 PM


"

1453R wrote:

"

faerwin wrote:

what's worrysome to me is that video evidence will no longer be trustable

It's already not trustable. People who think that video is infallible proof of whatever is being video'd are idiots. Especially when talking about low-res security-type feeds or other shit where fine detail is lost.

Even without The Horrible Dangers of Evil A.I., there are things called actors. They can look frighteningly like someone you'd like smeared if you find the right one and release grainy enough video. There's this thing called video editing that we've been doing forever. And there's also this thing called digital forensics that's supposed to let smart people disprove doctored footage, and it'll get better as doctored footage does.

If you're complaining that you can no longer trust your favorite YouTube conspiracists or your biased news agency of choice to put out video proof of the perfidy of mankind? Good. You should never have trusted it anyways. Skepticism is love. Skepticism is life. Especially these days.

ya, it's already there.

https://www.youtube.com/watch?v=724JhpqKEmY

this thread is talking about an AI being able to make these lifelike faces etc. Human beings can CGI people's faces already; that's already achievable and already being done. You can already completely fake a video of someone doing something if you want to go to the effort of doing it.

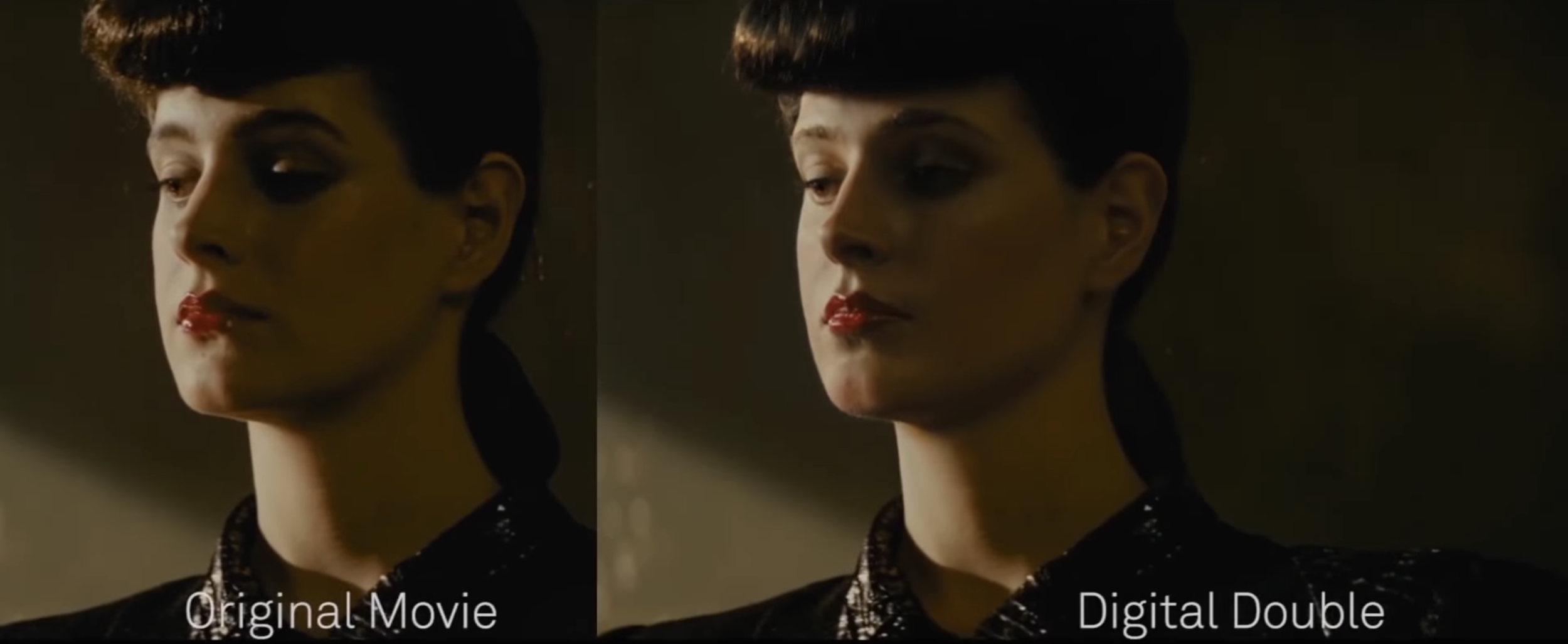

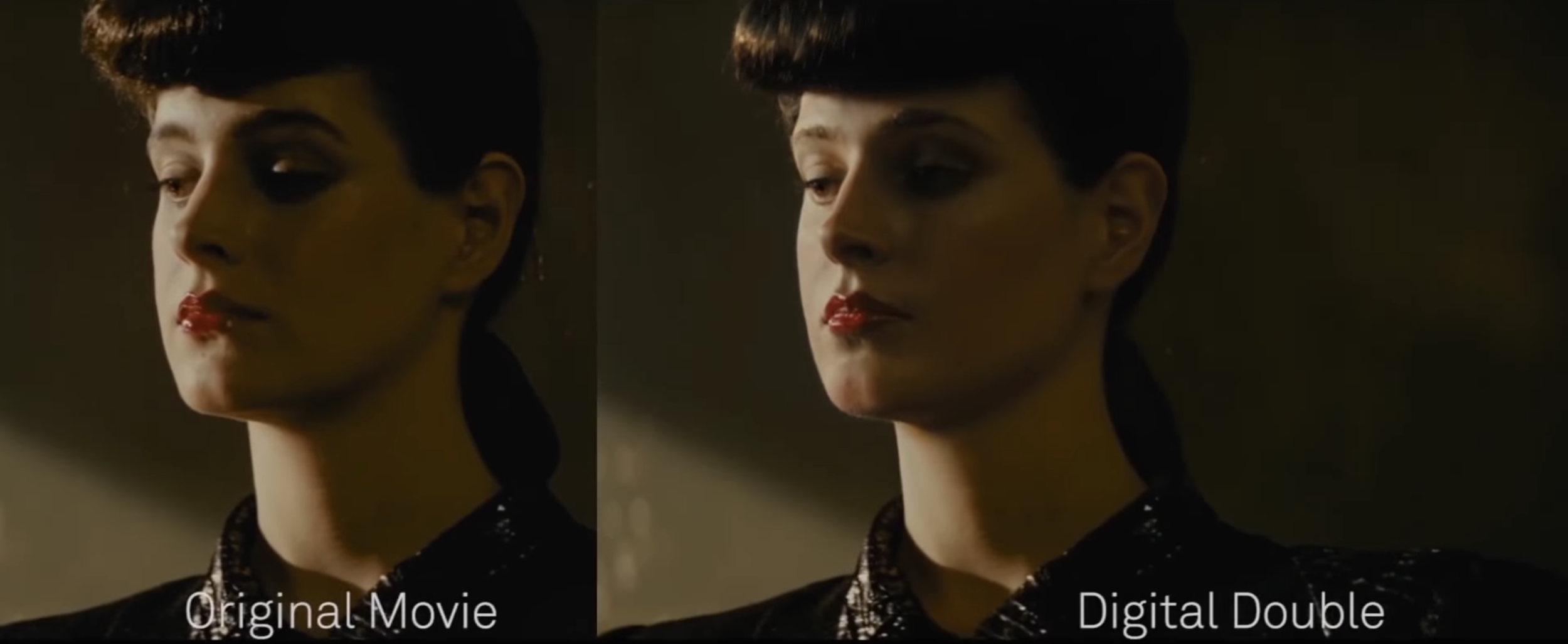

actual Rachel from the early 80s...

CGI Rachel in 2017

they can put it on a 4K-res IMAX screen and you can't tell the difference sitting in front of it. If they can do this, they can make some low-res security cam footage of anyone doing anything; they could have done that 10 years ago, probably more.

this is the CGI Rachel 'acting' the same scene from the original 80s Blade Runner movie as the original actual actor, side by side

https://static1.squarespace.com/static/51b3dc8ee4b051b96ceb10de/t/5a4ce73553450a16cf3a9321/1514989384490/blade-runner-2049-behind-the-scenes-video-shows-the-vfx-magic-behind-recreating-replicant-rachel1?format=2500w


I love all you people on the forums, we can disagree but still be friends and respect each other :)


Posted by Snorkle_uk#0761 on Dec 22, 2018, 1:14:24 AM


They probably spent millions, and it looks fairly good in a couple of still images, but it's not really hard to tell it's not her. That tech is gonna take a while.


Posted by SnowCrash#1157 on Dec 22, 2018, 1:47:20 AM


it doesn't look good in a couple of stills, it looks perfect in full motion at the highest possible res on a cinema screen. You would not know it wasn't her if you didn't already know it's not her. There's no way they spent millions doing that; it took some guys 4 weeks, it's a small scene.

https://youtu.be/fV34mT5m0bM?t=51

What video evidence for anything has been shot on 4k with cinema quality lighting etc? The ability to fake video evidence for stuff is something we are so far beyond at this point.

this is what video evidence of real life events looks like

you could have faked that in the 80s with a rubber mask.


Posted by Snorkle_uk#0761 on Dec 22, 2018, 2:49:56 AM


Go actually watch the first Blade Runner or Dune or any movie she was in and then compare it. I knew it wasn't her because it's really hard to fake a real person. Once you've seen a person in dozens of movies, this scene doesn't look right. It wasn't laughably bad, and it's getting closer, but it's still easy to spot a fake.

You think the best CGI guy working on 1 scene for 4 weeks didn't cost a ton?

The difference in your example is that one video would be altered and one wouldn't be. Besides, the real world is much more complex than a movie scene.


Posted by SnowCrash#1157 on Dec 22, 2018, 7:02:14 AM
